OpenAI’s gpt‑oss Models Now Available on Azure AI Foundry and Windows AI Foundry

In this news post, stclarke explains how Microsoft is bringing OpenAI’s open-weight gpt‑oss models to Azure AI Foundry and Windows AI Foundry. The move gives developers and enterprises new options for running and customizing OpenAI models in the cloud and on their own devices.

OpenAI’s Open‑Source Model: gpt‑oss on Azure AI Foundry and Windows AI Foundry

By stclarke

Overview

With the launch of OpenAI’s gpt‑oss models, the company’s first open-weight release since GPT‑2, Microsoft is enabling developers and enterprises to run, adapt, and deploy OpenAI models entirely on their own terms. This is a significant milestone: users can run gpt‑oss‑120b on a single datacenter-class GPU, or run gpt‑oss‑20b locally on supported hardware.

The Rise of Open, Flexible AI Stacks

AI is evolving from a software layer into the foundation of the tech stack itself. In response, Microsoft has built a full-stack factory for AI apps and agents:

- Azure AI Foundry offers a unified platform for building, fine-tuning, and deploying intelligent agents with confidence in the cloud.

- Foundry Local brings open-source AI to the edge, enabling flexible, on-device inferencing across billions of devices.

- Windows AI Foundry integrates these capabilities into Windows 11, supporting secure, low-latency AI development aligned with the Windows platform.

Capabilities of gpt‑oss Models

For the first time, organizations can:

- Run gpt‑oss‑120b (a model with 120 billion parameters) on a single datacenter-class GPU

- Run gpt‑oss‑20b locally, including support for discrete GPUs with 16GB+ VRAM

- Fine-tune, distill, and optimize these models with full transparency and control

- Adapt AI for domain-specific copilots, compress models for offline use, or prototype locally before production deployment
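Quantization is what makes these hardware figures plausible. The rough estimate below computes weight memory from parameter count and precision. It assumes about 4 bits per weight for a quantized checkpoint and 16 bits for BF16. The post does not state the actual precision, so treat the numbers as illustrative. The estimate also ignores the KV cache, activations, and runtime overhead, all of which add to the real footprint.

```python
# Rough weight-memory estimate: parameters x bits per weight / 8 bytes.
# Assumption: ~4 bits per weight for a quantized checkpoint vs 16 bits (BF16).
# Ignores KV cache, activations, and runtime overhead.


def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Nominal parameter counts taken from the model names in the post.
MODELS = {
    "gpt-oss-120b": 120e9,
    "gpt-oss-20b": 20e9,
}

if __name__ == "__main__":
    for name, params in MODELS.items():
        bf16 = weight_memory_gb(params, 16)
        q4 = weight_memory_gb(params, 4)
        print(f"{name}: ~{bf16:.0f} GB at 16-bit, ~{q4:.0f} GB at 4-bit")
```

Under these assumptions, gpt‑oss‑120b needs roughly 60 GB for its weights. That fits on a single datacenter GPU with, for example, 80 GB of memory. gpt‑oss‑20b needs roughly 10 GB, which is within the 16 GB of VRAM mentioned above. At 16-bit precision, neither model would fit its target.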

These models are not cut-down replicas; they are designed for real-world performance, cloud-scale reasoning, and edge-based agentic tasks.

Open Models: From Margins to Mainstream

Open models now power a range of solutions—from autonomous agents to domain-specific copilots—and are increasingly becoming a standard in AI deployment. Key advantages provided by Azure AI Foundry include:

- Fine-tuning and Customization: Teams can apply parameter-efficient fine-tuning (PEFT) methods such as LoRA and QLoRA, integrate proprietary data, and deliver new checkpoints rapidly.

- Resource Optimization: Models can be distilled, quantized, trimmed, or sparsified for efficient edge deployment.

- Inspection and Security: With full weight access, teams can inspect attention patterns for audits, inject domain adapters, retrain layers, and export models for containerized inference.
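LoRA is one of the parameter-efficient methods named above. It freezes a weight matrix W (d × k) and trains only a low-rank update B·A, where B is d × r and A is r × k. The pure-Python sketch below shows the forward pass and compares trainable parameter counts. The dimensions are hypothetical and are not taken from gpt‑oss.

```python
# Pure-Python LoRA sketch: y = W x + (alpha / r) * B (A x), with W frozen.


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of numbers)."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha, r):
    """Frozen base output plus the scaled low-rank update B @ (A @ x)."""
    base = matvec(W, x)              # d outputs from the frozen d x k weight
    delta = matvec(B, matvec(A, x))  # A (r x k) projects down, B (d x r) projects back up
    scale = alpha / r
    return [b + scale * dl for b, dl in zip(base, delta)]


def full_finetune_params(d, k):
    """Full fine-tuning updates every entry of the d x k matrix."""
    return d * k


def lora_params(d, k, r):
    """LoRA trains only the factors B (d x r) and A (r x k)."""
    return d * r + r * k


if __name__ == "__main__":
    d = k = 4096  # hypothetical layer size
    r = 8         # hypothetical LoRA rank
    full, lora = full_finetune_params(d, k), lora_params(d, k, r)
    print(f"full: {full:,}  LoRA: {lora:,}  ({lora / full:.2%} of full)")
```

With d = k = 4096 and r = 8, the adapter trains 65,536 parameters instead of 16,777,216, about 0.39% of the full matrix. LoRA commonly initializes B to zeros, so the adapted layer starts out identical to the frozen one.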

Azure AI Foundry supports more than 11,000 models, providing a unified place to evaluate, fine-tune, and operationalize AI reliably and securely.

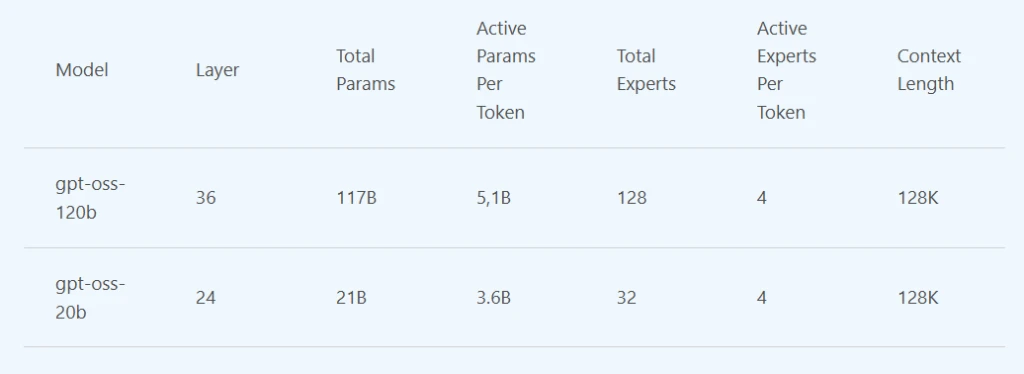

Meet the Models: gpt‑oss‑120b and gpt‑oss‑20b

- gpt‑oss‑120b: A 120B-parameter reasoning model that delivers o4-mini-level performance in a smaller footprint. It is well suited to demanding tasks such as math, code, and domain-specific Q&A.

- gpt‑oss‑20b: Lightweight, tool-savvy, and optimized for code execution and assistant tasks. It runs efficiently on high-end Windows hardware, with macOS support coming soon, and suits embedded and bandwidth-constrained environments.

Both models are compatible with OpenAI’s Responses API, which simplifies integration into existing apps.
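The function below builds a request body using the standard Responses API fields `model`, `input`, `instructions`, and `max_output_tokens`. This shows what compatibility with that API looks like in practice. The deployment name is a hypothetical placeholder. The code only constructs and prints the JSON and does not send it, because the endpoint URL and authentication depend on how you deploy the model.

```python
import json
from typing import Optional

# Hypothetical deployment name: replace it with the name of your own gpt-oss deployment.
DEPLOYMENT_NAME = "gpt-oss-120b"


def build_responses_request(prompt: str, instructions: Optional[str] = None,
                            max_output_tokens: int = 512) -> dict:
    """Build a request body shaped like an OpenAI Responses API call."""
    body = {
        "model": DEPLOYMENT_NAME,
        "input": prompt,
        "max_output_tokens": max_output_tokens,
    }
    # System-style guidance is optional, so only include it when given.
    if instructions is not None:
        body["instructions"] = instructions
    return body


if __name__ == "__main__":
    request = build_responses_request(
        "Summarize the benefits of open-weight models in two sentences.",
        instructions="You are a concise technical assistant.",
    )
    print(json.dumps(request, indent=2))
```

With the official `openai` Python package, you can pass the same fields as keyword arguments to `client.responses.create(...)`.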

Hybrid Cloud and Edge AI

- Azure AI Foundry allows fast deployment of inference endpoints and scalable model training pipelines.

- Foundry Local brings open models like gpt-oss-20b to Windows AI Foundry, with support for CPUs, GPUs, and NPUs—enabling truly cloud-optional workflows and privacy-preserving, on-device AI.
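A cloud-optional workflow can be pictured as a small router. Private or offline work stays on the device, and heavier requests go to the cloud. The sketch below is a hypothetical design, not a Foundry API. Both endpoint URLs are placeholders, and the routing rules are assumptions chosen for illustration.

```python
from dataclasses import dataclass

# Placeholder endpoints (assumptions): substitute the URLs of your own deployments.
LOCAL_ENDPOINT = "http://localhost:8000/v1"               # hypothetical on-device server
CLOUD_ENDPOINT = "https://your-resource.example.com/v1"  # hypothetical cloud deployment


@dataclass(frozen=True)
class Route:
    endpoint: str
    model: str


def choose_route(sensitive: bool, online: bool, needs_large_model: bool) -> Route:
    """Pick where to run a request in a hypothetical hybrid setup."""
    # Privacy and connectivity take priority: keep the request on the device.
    if sensitive or not online:
        return Route(LOCAL_ENDPOINT, "gpt-oss-20b")
    # Heavier reasoning tasks go to the larger cloud-hosted model.
    if needs_large_model:
        return Route(CLOUD_ENDPOINT, "gpt-oss-120b")
    # Everything else stays local to reduce cost and latency.
    return Route(LOCAL_ENDPOINT, "gpt-oss-20b")


if __name__ == "__main__":
    print(choose_route(sensitive=False, online=True, needs_large_model=True))
```

In this design, privacy wins. A request flagged as sensitive stays on the local gpt‑oss‑20b even when the larger cloud model would otherwise be preferred.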

Benefits to Developers and Decision Makers

- For Developers: Full transparency and control, including the ability to inspect, customize, and deploy models on your preferred hardware.

- For Decision Makers: Greater flexibility, competitive performance, less dependency on proprietary black boxes, and more control over deployment, compliance, and costs.

Commitment to Open and Responsible AI

Microsoft aims to democratize AI by offering both open and proprietary models through its platforms:

- Support for safety, governance, and compliance is built into Foundry

- The GitHub Copilot Chat extension is now open source, part of the effort to make VS Code an open-source AI editor

- Integration of research, product, and platform brings cloud and edge breakthroughs to mainstream development

Next Steps and Resources

- Deploy gpt‑oss in the cloud: Use Azure AI Foundry and browse the Azure AI Model Catalog for endpoint deployment.

- Deploy gpt‑oss‑20b locally: Follow the Foundry Local QuickStart guide to run it on Windows devices (macOS support is coming soon).

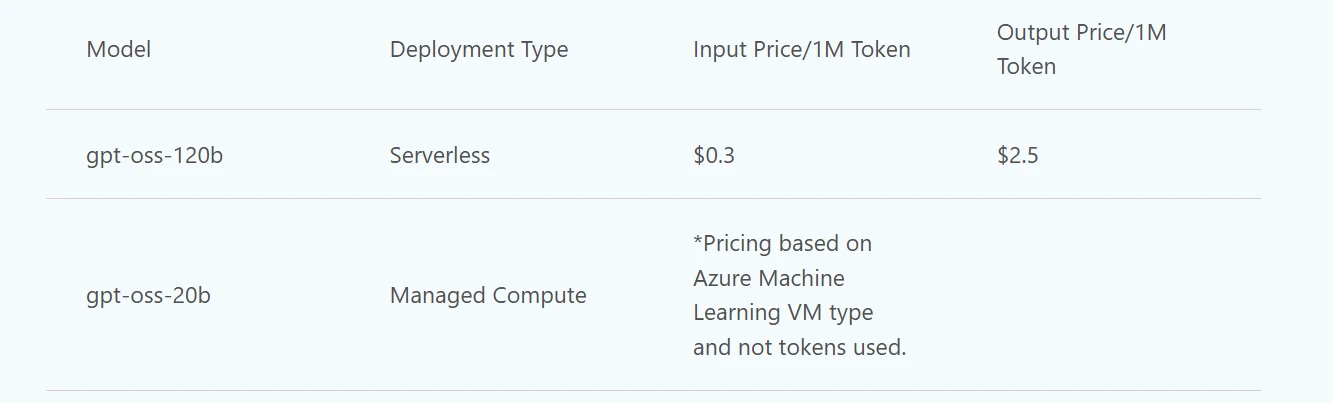

- Pricing: Detailed pricing for managed compute is published on Azure’s pricing pages (accurate as of August 2025).

By embracing gpt‑oss and expanding Foundry’s reach, Microsoft positions Azure as a bridge between open AI research and enterprise-ready deployments—empowering every developer to innovate with confidence.

This post appeared first on “Microsoft News”.